Welcome to largecats' blog

-

Stream Processing 101

This post is based on a talk I gave on general concepts in stream processing.

-

Spark Data Pipeline Framework Management

Data pipelines are widely used to support business needs. As the business expands, both the volume and diversity of its data increase. This calls for a system of data pipelines, each channelling data for a specific area of the business that may interact in various ways with other business areas. How should this system of data pipelines be managed? If they share a common structure, is it worth maintaining this structure in a separate framework package? How should the framework package be managed together with the data pipelines that use it?

-

sbt Multi-Project Dependency

If a project depends on an external project, how should the dependency be managed in sbt?

-

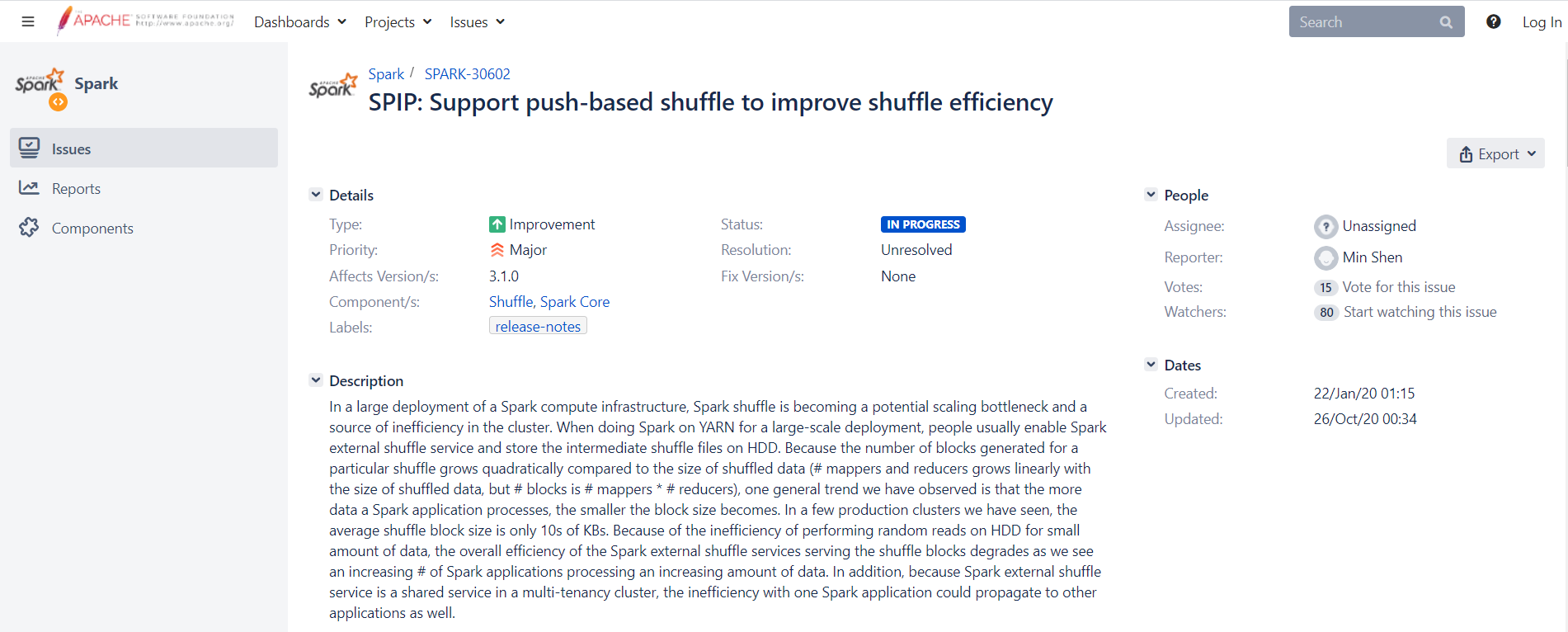

Spark Magnet: Push-based Shuffle

Recently, our data infrastructure team deployed a new version of Spark called Spark Magnet. It is said to offer a 30% to 50% performance improvement over the original Spark 3.0.

Spark Magnet is a patch to Spark 3 that improves shuffle efficiency:

Spark Magnet's JIRA ticket.

Provided by LinkedIn’s data infrastructure team, it makes use of the Magnet shuffle service, which is a novel shuffle mechanism built on top of Spark’s native shuffle service. It improves shuffle efficiency by addressing several major bottlenecks with reasonable trade-offs.

-

Spark Partitions

Spark partitions are important for parallelism.

-

Variances in Type Systems

The concept of variance is used to describe how subtyping relations relate to one another. Subtyping is a relation defined between two types `S` and `T`, such that if `S` is a subtype of `T` (`S <: T`), then a thing of type `S` can be used in any context where a thing of type `T` is expected. E.g., if `Cat` is a subtype of `Animal`, a `Cat` can replace an `Animal`.

But how should the following be related?

- `Collection[Cat]` vs `Collection[Animal]`
- `get_cat: () -> Cat` vs `get_animal: () -> Animal`
- `print_cat: Cat -> ()` vs `print_animal: Animal -> ()`

This is where variance comes in. Variance refers to how subtyping of a more complex type relates to subtyping of its component type.
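
To make the question concrete, here is a minimal sketch in Scala, chosen because the `S <: T` notation above resembles Scala's. The names `Animal`, `Cat`, `Box`, and `VarianceDemo` are hypothetical and exist only for illustration; `Box` stands in for the `Collection` in the question. It shows one possible answer for each of the three pairs, assuming an immutable container and Scala's built-in function types.

```scala
// Hypothetical types, for illustration only.
class Animal { def name: String = "animal" }
class Cat extends Animal { override def name: String = "cat" }

// Covariant in A (+A): Box[Cat] <: Box[Animal].
// This is safe because an immutable Box only ever produces an A.
final case class Box[+A](value: A)

object VarianceDemo {
  // Pair 1: a container of cats can be used as a container of animals.
  val catBox: Box[Cat] = Box(new Cat)
  val animalBox: Box[Animal] = catBox

  // Pair 2: functions are covariant in their result type,
  // so a producer of cats can stand in for a producer of animals.
  val getCat: () => Cat = () => new Cat
  val getAnimal: () => Animal = getCat

  // Pair 3: functions are contravariant in their argument type,
  // so the substitution runs the other way: something that can print
  // any animal can stand in for something that prints cats.
  val printAnimal: Animal => String = a => s"printing ${a.name}"
  val printCat: Cat => String = printAnimal

  def main(args: Array[String]): Unit = {
    println(animalBox.value.name) // cat
    println(getAnimal().name)     // cat
    println(printCat(new Cat))    // printing cat
  }
}
```

Reversing any of the three assignments (for example, assigning `printCat` to a value of type `Animal => String`) is rejected by the compiler, which is how the direction of each relation shows up in practice.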