Timeout errors may occur while a Spark application is running, or even after it has finished. Below are some common timeout errors and their solutions.

Errors and solutions

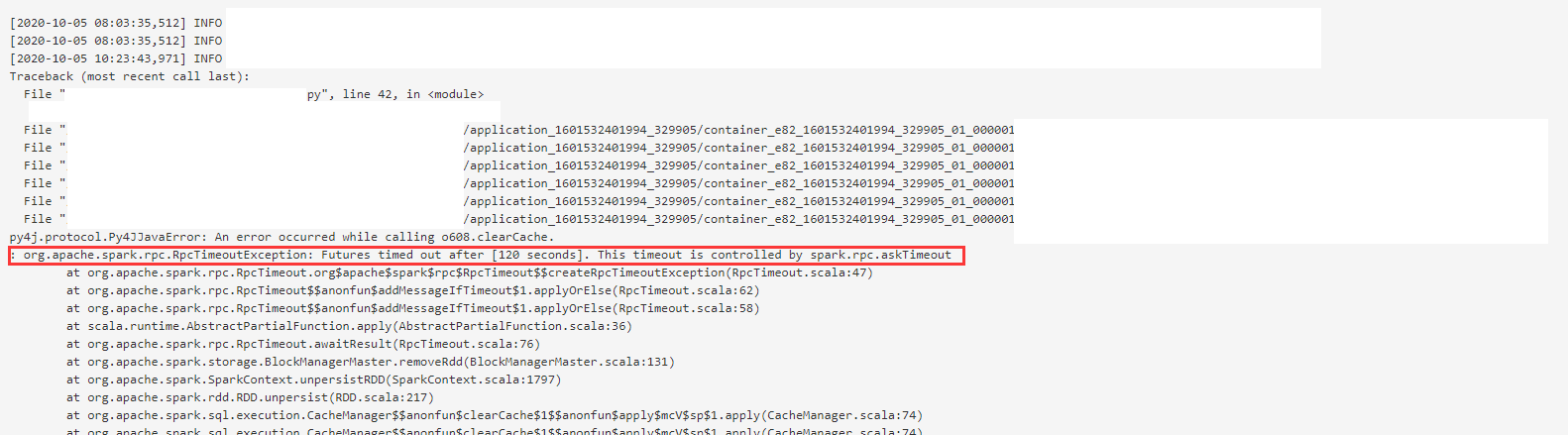

spark.rpc.RpcTimeoutException

As suggested here and here, set spark.network.timeout to a value higher than its default of 120s (we set it to 10000000, which Spark interprets as seconds, roughly 116 days). Alternatively, consider upgrading to a later version of Spark, where some of the relevant timeout defaults are set to None.
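The sketch below shows one way to apply this setting when the session is created. It assumes PySpark. The helper `with_timeouts` and the `TIMEOUT_CONF` dict are hypothetical names used only for illustration. The value "10000000s" is the same as the bare 10000000 above, written with an explicit unit. spark.network.timeout is a core (non-SQL) setting that is read when the application starts. For that reason, it is set on the builder here rather than changed at runtime. The command-line equivalent is `spark-submit --conf spark.network.timeout=10000000s`.

```python
# Minimal sketch (assumes PySpark): raise the network timeout before the
# SparkSession is created. spark.network.timeout is read at startup, so it is
# applied on the builder instead of via spark.conf.set() at runtime.
TIMEOUT_CONF = {
    # Spark's default is 120s; this mirrors the value used in the text.
    "spark.network.timeout": "10000000s",
}


def with_timeouts(builder, conf=TIMEOUT_CONF):
    """Apply each timeout setting to a SparkSession.builder and return it.

    `with_timeouts` is a hypothetical helper; builder.config(key, value) is the
    standard PySpark builder method and returns the builder for chaining.
    """
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder


# Usage (requires a Spark installation):
# from pyspark.sql import SparkSession
# spark = with_timeouts(SparkSession.builder.appName("my-app")).getOrCreate()
```

If a SparkContext already exists in the process, `getOrCreate()` reuses it. In that case core settings passed on the builder may not take effect, so the spark-submit form is the more reliable option.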

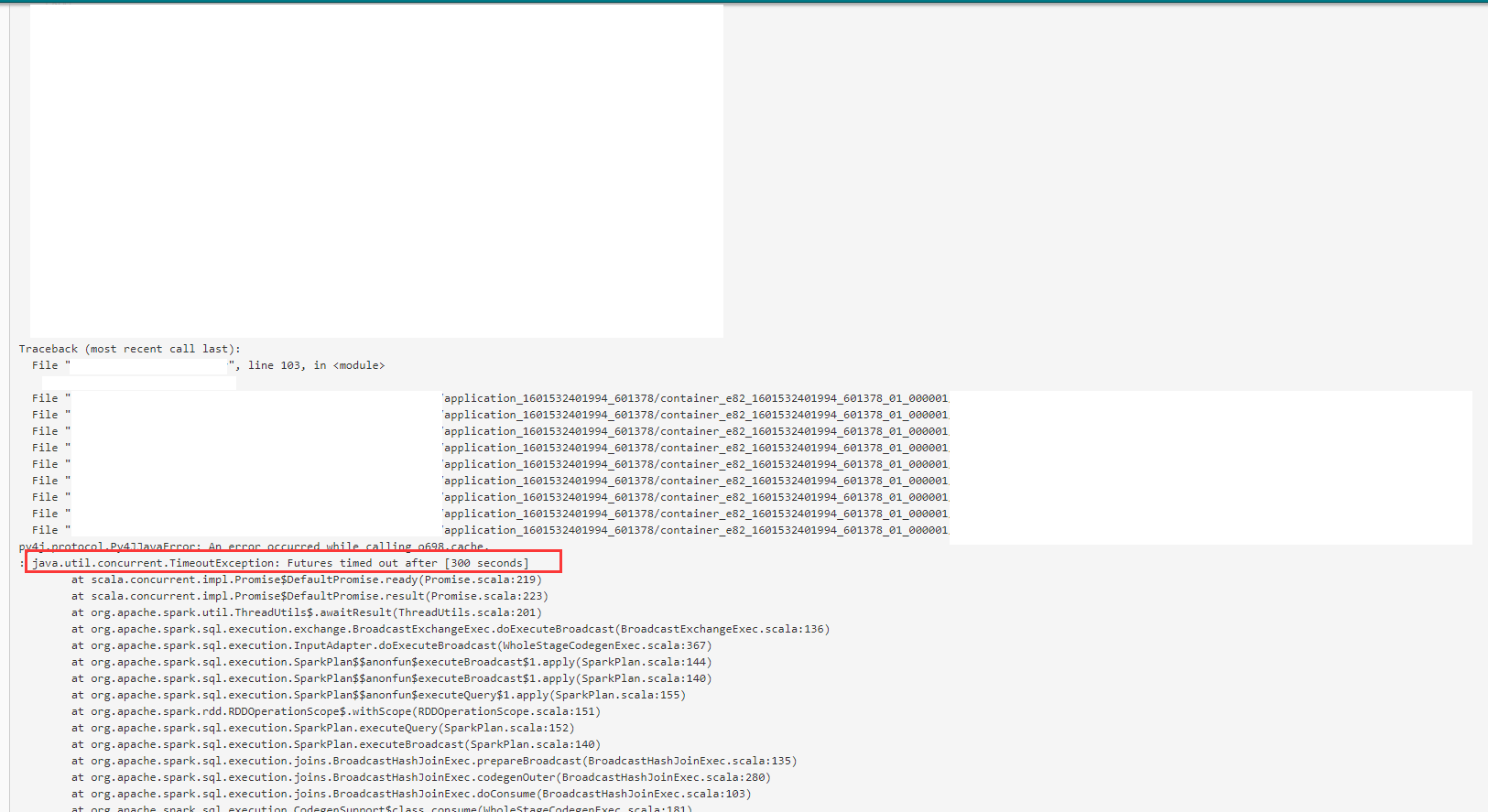

java.util.concurrent.TimeoutException

We observed that this error usually occurs while the query is running or just before the Spark application finishes.

As suggested here, this error may appear if the Spark context is not stopped explicitly when the Spark program finishes. In that case the ShutdownHookManager has to stop the Spark context within 10s instead. A simple solution is to call sc.stop() at the end of the Spark application.
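A minimal sketch of this fix, assuming PySpark, is shown below. It wraps the job in `try`/`finally` so the session is stopped explicitly even when the job raises, rather than being left to the shutdown hook. The helper `run_and_stop` is hypothetical. In PySpark, `SparkSession.stop()` also stops the underlying SparkContext, so it plays the same role as `sc.stop()` here.

```python
# Minimal sketch: always stop the Spark session/context explicitly at the end
# of the application, even if the job fails, instead of relying on the JVM
# shutdown hook to do it under a short timeout.
def run_and_stop(create_session, job):
    """Create a session, run `job(session)`, and always stop the session.

    `run_and_stop` is a hypothetical helper. `create_session` is any
    zero-argument callable returning an object with a .stop() method,
    e.g. a PySpark SparkSession.
    """
    session = create_session()
    try:
        return job(session)
    finally:
        # Equivalent to calling sc.stop() at the end of the application.
        session.stop()


# Usage (requires a Spark installation):
# from pyspark.sql import SparkSession
# count = run_and_stop(
#     lambda: SparkSession.builder.appName("my-app").getOrCreate(),
#     lambda spark: spark.range(10).count(),
# )
```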

As suggested here, join operations on large datasets may fail when broadcasting a table for a broadcast join takes longer than spark.sql.broadcastTimeout. Assuming the joins have already been optimized to a reasonable extent, a simple solution is to set this timeout higher than its default of 300s (we set it to 36000, i.e. 10 hours).
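spark.sql.broadcastTimeout is a runtime SQL setting measured in seconds, so it can be changed on an existing session. The sketch below assumes PySpark and uses the standard `spark.conf.set` / `spark.conf.get` methods. The helper `raise_broadcast_timeout` and the constant name are hypothetical.

```python
# Minimal sketch (assumes PySpark): raise the broadcast join timeout on an
# existing session. spark.sql.broadcastTimeout is in seconds (default 300);
# 36000 mirrors the value used in the text.
BROADCAST_TIMEOUT_SECONDS = 36000


def raise_broadcast_timeout(spark, seconds=BROADCAST_TIMEOUT_SECONDS):
    """Set spark.sql.broadcastTimeout and return the value now in effect.

    `raise_broadcast_timeout` is a hypothetical helper; spark.conf.set and
    spark.conf.get are the PySpark RuntimeConfig methods.
    """
    spark.conf.set("spark.sql.broadcastTimeout", str(seconds))
    return spark.conf.get("spark.sql.broadcastTimeout")


# Usage (requires a running SparkSession named `spark`):
# raise_broadcast_timeout(spark)
# result = large_df.join(other_df, "key")  # hypothetical DataFrames
```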